History of the RGB color model

I'm going to take a trip through the history of the science of human color perception, which led to the creation of modern video standards. I will also try to explain frequently used terminology. In addition, I will briefly describe why the typical game production pipeline will increasingly resemble the one used in the film industry.

Pioneers of color vision research

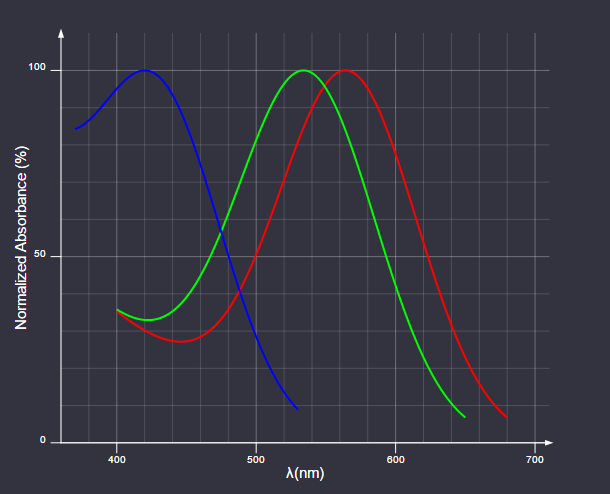

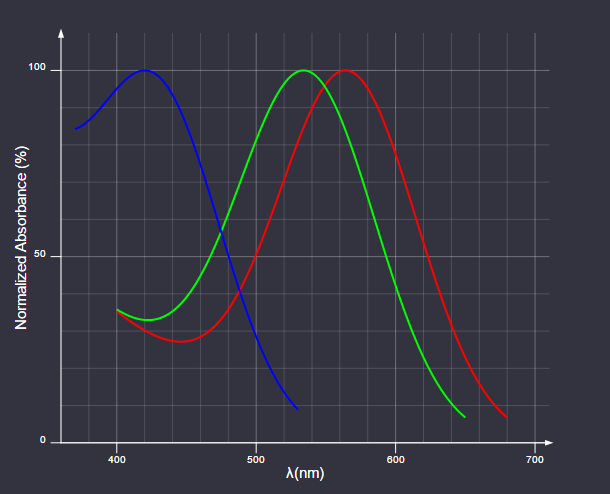

Today we know that the retina of the human eye contains three different types of photoreceptor cells called cones. Each of the three cone types contains a protein from the opsin family, which absorbs light in a different part of the spectrum:

Light absorption by opsins

The cones correspond to the red, green, and blue parts of the spectrum and are often called long (L), medium (M), and short (S), after the wavelengths to which they are most sensitive.

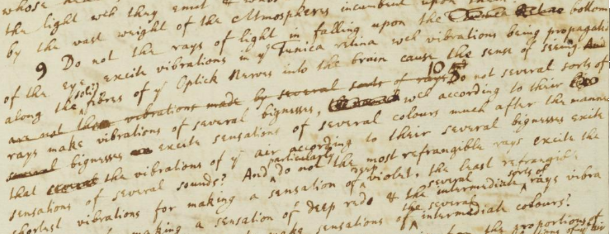

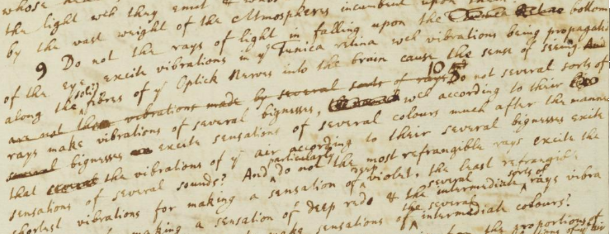

One of the first scientific works on the interaction of light and the retina was Isaac Newton's treatise "Theory Concerning Light and Colors", written between 1670 and 1675. Newton theorized that light of different wavelengths made the retina resonate at the same frequency, and that these vibrations were then transmitted via the optic nerve to the "sensorium".

"Rays of light falling on the back of the eye excite vibrations in the retina, which propagate along the fibers of the optic nerves to the brain, creating the sense of sight. Different kinds of rays create vibrations of different strengths, which, according to their strength, excite sensations of different colors..."

(I recommend reading Newton's scanned drafts on the University of Cambridge website. I'm stating the obvious, of course, but what a genius he was!)

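More than a hundred years later, Thomas Young concluded that since the resonance frequency is a property of the resonating system, the retina would need an infinite number of different resonant systems to absorb light of every frequency. Young considered this unlikely and reasoned that the number was limited to one system each for red, yellow, and blue, the colors traditionally used in subtractive color mixing. In his own words:

"Since, for the reason given by Newton, the motion of the retina is probably vibratory rather than undulatory in nature, the frequency of the vibrations must depend on the structure of its substance. As it is almost impossible to believe that each sensitive point of the retina contains an infinite number of particles, each capable of vibrating in perfect unison with every possible wave, it becomes necessary to assume that the number is limited, for example, to the three primary colors: red, yellow, and blue..."

Young's hypothesis about the retina was wrong, but he drew the correct conclusion: the eye contains a finite number of cell types.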

In 1850, Hermann Helmholtz obtained the first experimental proof of Young's theory. Helmholtz asked subjects to match the colors of various sample light sources by adjusting the brightness of several monochromatic light sources. He concluded that three light sources, in the red, green, and blue parts of the spectrum, are necessary and sufficient to match all the samples.

Birth of modern colorimetry

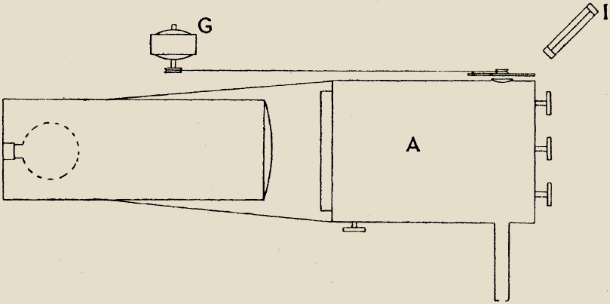

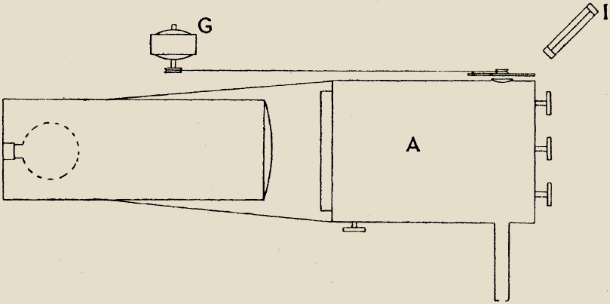

Fast forward to the early 1930s. By that time the scientific community had a reasonably good idea of the inner workings of the eye. (Although it took another 20 years before George Wald was able to experimentally confirm the presence and function of the opsin pigments in the cone cells of the retina. This discovery earned him the Nobel Prize in Physiology or Medicine in 1967.) The Commission Internationale de l'Éclairage (International Commission on Illumination), or CIE, set out to create a comprehensive quantitative description of human color perception. The quantification was based on experimental data collected by William David Wright and John Guild using a setup similar to the one first used by Hermann Helmholtz. The primaries were chosen at 435.8 nm for blue, 546.1 nm for green, and 700 nm for red.

John Guild's experimental setup: three knobs adjust the primary colors

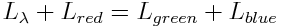

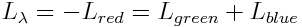

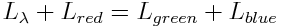

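Because the sensitivities of the M and L cones overlap significantly, it was impossible to match the blue-green part of the spectrum using the chosen wavelengths. To match these colors, some of the red primary had to be added to the test color itself. For a test light $C(\lambda)$ and primaries $\mathbf{R}, \mathbf{G}, \mathbf{B}$ with non-negative intensities $r, g, b$:

$$ C(\lambda) + r\,\mathbf{R} = g\,\mathbf{G} + b\,\mathbf{B} $$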

If we imagine for a moment that the primaries can make a negative contribution, the equation can be rewritten as follows:

$$ C(\lambda) = -r\,\mathbf{R} + g\,\mathbf{G} + b\,\mathbf{B} $$

The result of the experiments was a table of RGB triples for every visible wavelength, which can be plotted as follows:

CIE 1931 RGB color matching functions

Of course, colors with a negative red component cannot be displayed using the CIE primaries.

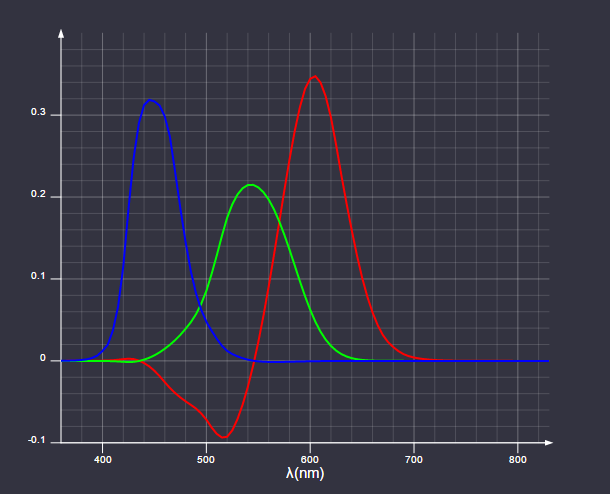

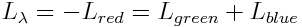

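Now we can find the tristimulus values of a light with spectral power distribution $S$ as the following inner products, where $\bar{r}, \bar{g}, \bar{b}$ are the color matching functions from the table above:

$$ R = \int S(\lambda)\,\bar{r}(\lambda)\,d\lambda, \qquad G = \int S(\lambda)\,\bar{g}(\lambda)\,d\lambda, \qquad B = \int S(\lambda)\,\bar{b}(\lambda)\,d\lambda $$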

It may seem obvious that sensitivities at different wavelengths can be integrated this way, but this actually relies on the eye's response being physically linear (additive) in the light it receives. This was empirically confirmed in 1853 by Hermann Grassmann, and the integrals above, in their modern formulation, are known as Grassmann's law.

The term "color space" arose because the primaries (red, green, and blue) can be considered a basis of a vector space. In this space, the different colors a person perceives are represented by rays emanating from the origin. The modern definition of a vector space was introduced by Giuseppe Peano in 1888, but more than 30 years earlier James Clerk Maxwell had already used the nascent theory that later became linear algebra to formally describe the trichromatic color system.

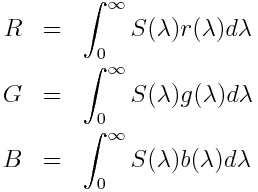

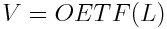

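The CIE decided that, to simplify calculations, it would be more convenient to work with a color space in which the primary coefficients are always positive. The three new primaries, X, Y, and Z, are related to the RGB color space by the following transformation:

$$
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = \frac{1}{0.17697}
\begin{bmatrix} 0.49000 & 0.31000 & 0.20000 \\ 0.17697 & 0.81240 & 0.01063 \\ 0.00000 & 0.01000 & 0.99000 \end{bmatrix}
\begin{bmatrix} R \\ G \\ B \end{bmatrix}
$$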

This new set of primaries cannot be realized in the physical world. It is just a mathematical tool that simplifies working with the color space. Besides keeping the primary coefficients positive, the new space is designed so that the Y coefficient corresponds to perceived brightness. This component is known as CIE luminance (you can read more about it in Charles Poynton's excellent Color FAQ).

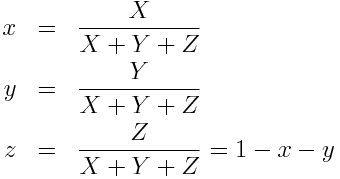

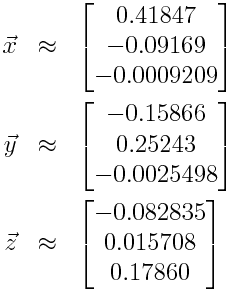

To simplify visualization of the resulting color space, we perform one last transformation. Dividing each component by the sum of the components gives a dimensionless measure of color that is independent of its brightness:

$$ x = \frac{X}{X+Y+Z}, \qquad y = \frac{Y}{X+Y+Z}, \qquad z = \frac{Z}{X+Y+Z} = 1 - x - y $$

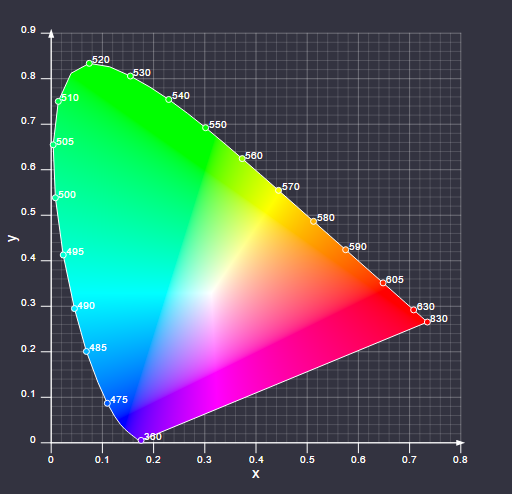

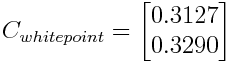

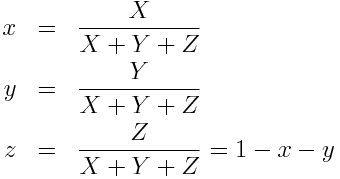

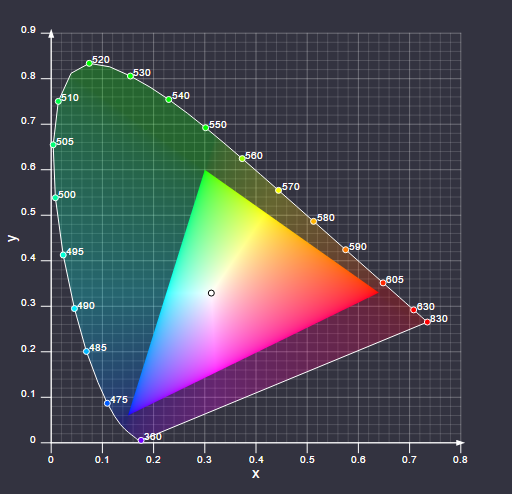

The coordinates x and y are known as chromaticity coordinates, and together with the CIE luminance Y they form the CIE xyY color space. If we plot the chromaticity coordinates of all colors with a given luminance, we get the following diagram, which you are probably familiar with:

CIE 1931 xyY diagram

The last thing you need to know is which color counts as white in a color space. In a display system, white is the xy chromaticity of the color obtained when all RGB primary coefficients are equal.

Over time, several new color spaces appeared that improved on CIE 1931 in various respects. Despite this, CIE xyY remains the most popular color space for describing the properties of display devices.
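The projection from XYZ to xyY and back is simple enough to sketch directly. The Python below is a minimal illustration, not code from any particular library. The function names are my own, and returning (0, 0) as the chromaticity of black (X + Y + Z = 0) is an arbitrary convention chosen for this sketch.

```python
def xyz_to_xyY(X, Y, Z):
    """Split CIE XYZ into chromaticity (x, y) and luminance Y."""
    total = X + Y + Z
    if total == 0.0:
        # Black has no defined chromaticity; (0, 0) is an arbitrary choice here.
        return 0.0, 0.0, 0.0
    return X / total, Y / total, Y


def xyY_to_xyz(x, y, Y):
    """Rebuild CIE XYZ from chromaticity and luminance (requires y > 0)."""
    X = x * Y / y
    Z = (1.0 - x - y) * Y / y
    return X, Y, Z


# Scaling a color's XYZ changes its luminance but not its chromaticity.
print(xyz_to_xyY(0.2, 0.3, 0.5))
print(xyz_to_xyY(0.4, 0.6, 1.0))
```

The two printed triples share the same (x, y) and differ only in Y. This is the sense in which chromaticity is "independent of brightness".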

Transfer functions

Before looking at the standards, we need to introduce and explain two more concepts.

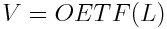

Opto-electronic transfer function

The opto-electronic transfer function (OETF) determines how linear light captured by a device (a camera) is encoded in the signal, i.e. it is a function of the form:

$$ V = \mathrm{OETF}(L) $$

V used to be an analog signal, but today it is, of course, digitally encoded. Game developers rarely deal with OETFs directly. One case where the OETF matters is combining in-game video with computer graphics. In that case you need to know which OETF the video was recorded with, so you can recover linear light and blend it correctly with the rendered image.
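Here is a minimal sketch of that case. It assumes the video was encoded with the Rec. 709 OETF (V = 4.5L below L = 0.018, V = 1.099L^0.45 - 0.099 above). Real footage may use a different OETF, and broadcast content is in practice graded for a reference display, so treat this only as an illustration. The function names are hypothetical.

```python
def rec709_inverse_oetf(v):
    """Invert the Rec. 709 OETF: signal V in [0, 1] -> scene-linear light L."""
    if v < 4.5 * 0.018:  # below 0.081 the OETF is the linear segment V = 4.5 L
        return v / 4.5
    return ((v + 0.099) / 1.099) ** (1.0 / 0.45)


def blend_video_over_render(video_signal, render_linear, alpha):
    """Alpha-blend one channel of decoded video over linear rendered light.

    The blend happens in linear light. The result still needs tone mapping
    and display encoding, like any other value in the HDR frame buffer.
    """
    video_linear = rec709_inverse_oetf(video_signal)
    return alpha * video_linear + (1.0 - alpha) * render_linear


print(blend_video_over_render(0.5, 0.2, 0.5))
```

Blending the encoded signal values directly, without this decoding step, would mix nonlinear values and give visibly wrong edges and fades.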

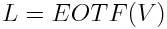

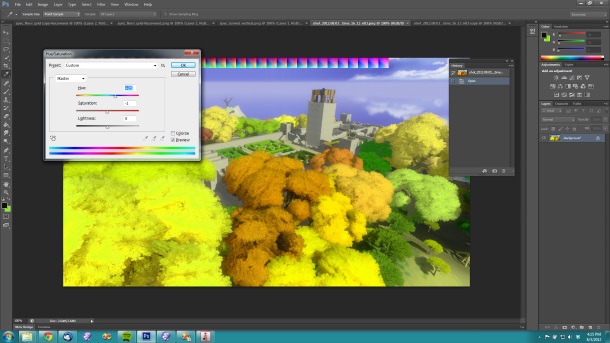

Electro-optical transfer function

The electro-optical transfer function (EOTF) performs the opposite task to the OETF: it determines how the signal is converted into linear light:

$$ L = \mathrm{EOTF}(V) $$

This function matters more to game developers, because it defines how their content will be displayed on users' TV screens and monitors.

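Relationship between EOTF and OETF

Although the EOTF and OETF concepts are related, they serve different purposes. The OETF is needed to represent the captured scene, from which we can then reconstruct the original linear lighting (conceptually, this is the HDR (High Dynamic Range) frame buffer of an ordinary game). Here is what happens during the stages of a conventional film production:

- Capture scene data
- Invert the OETF to recover the linear lighting values
- Color grading
- Mastering for various target formats (DCI-P3, Rec. 709, HDR10, Dolby Vision, etc.):
  - Reduce the dynamic range of the material to match that of the target format (tone mapping)
  - Convert to the color space of the target format
  - Apply the inverse EOTF to the material (when the display device applies its EOTF, the image is restored as intended)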

A detailed discussion of this process is beyond the scope of this article, but I recommend studying the detailed formal description of the ACES (Academy Color Encoding System) workflow.

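Until now, the standard game pipeline has been as follows:

- Rendering
- HDR frame buffer
- Tone mapping
- Inverse EOTF for the intended display device (usually sRGB)
- Color grading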
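The SDR steps above can be sketched per pixel in a few lines. This is a hedged illustration with several stand-ins:

- The Reinhard operator is my choice for the tone mapping step; engines use many different curves.
- The `exposure` parameter is hypothetical.
- The final color grading step is omitted.

```python
def reinhard(x):
    """Simple Reinhard tone-mapping operator (a stand-in; engines use many others)."""
    return x / (1.0 + x)


def srgb_inverse_eotf(c):
    """Encode linear light in [0, 1] with the sRGB transfer function."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def sdr_pipeline(hdr_pixel, exposure=1.0):
    """HDR frame buffer value -> tone mapping -> sRGB encoding -> 8-bit codes."""
    out = []
    for channel in hdr_pixel:
        mapped = reinhard(channel * exposure)  # tone mapping into [0, 1)
        encoded = srgb_inverse_eotf(mapped)    # inverse EOTF for an sRGB display
        out.append(round(encoded * 255))       # quantize to 8 bits
    return out


print(sdr_pipeline((0.0, 1.0, 1e9)))
```

Tone mapping has to happen before the inverse EOTF. The sRGB encoding is only defined for values in [0, 1], while the HDR frame buffer holds unbounded scene values.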

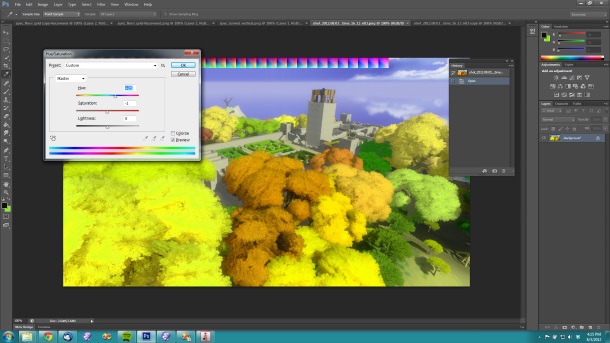

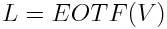

Most game engines use the color grading method popularized by Naty Hoffman's presentation "Color Enhancement for Videogames" at SIGGRAPH 2010. This method was practical when only SDR (Standard Dynamic Range) output was targeted, and it let artists use color grading software already installed on most of their computers, such as Adobe Photoshop.

Standard SDR color grading workflow (image courtesy of Jonathan Blow)

After the introduction of HDR, most games began moving to a pipeline similar to the one used in film production. Even without HDR output, a film-like pipeline made it possible to optimize performance. Color grading in HDR means you have the entire dynamic range of the scene at your disposal. It also makes possible some effects that were previously unavailable.

Now we are ready to look at the standards currently used to describe TV formats.

Standards

Rec. 709

Most standards related to video broadcasting are published by the International Telecommunication Union (ITU), a UN agency that deals mainly with information and communication technologies.

Recommendation ITU-R BT.709, more commonly called Rec. 709, is the standard that describes the properties of HDTV. The first version was released in 1990 and the latest in June 2015. The standard defines parameters such as aspect ratio, resolution, and frame rate. Most people are familiar with these, so I will skip them and focus on the parts of the standard relating to color reproduction and brightness.

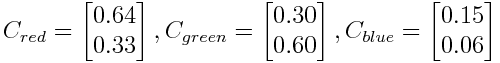

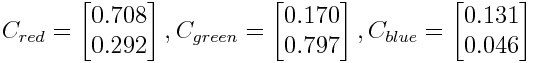

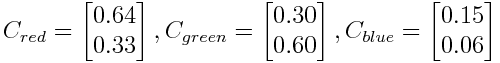

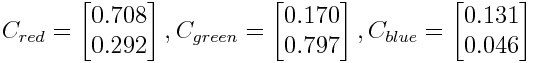

The standard describes color in detail using the CIE xyY color space. The red, green, and blue light sources of a compliant display must be chosen so that their individual chromaticity coordinates are as follows:

- Red: x = 0.64, y = 0.33
- Green: x = 0.30, y = 0.60
- Blue: x = 0.15, y = 0.06

Their relative intensities must be set so that the white point has the chromaticity x = 0.3127, y = 0.3290.

(This white point is also known as CIE Standard Illuminant D65; its chromaticity corresponds to the spectral power distribution of average daylight.)
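The primaries and the white point are all you need to build a display's linear RGB-to-XYZ matrix. Put the XYZ of each primary (at Y = 1) in a column, then scale the columns so that R = G = B = 1 lands exactly on the white point. Below is a self-contained sketch of that standard derivation; the helper names are my own.

```python
def xy_to_xyz_column(x, y):
    """XYZ of a chromaticity (x, y) normalized to luminance Y = 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def det3(a):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def solve3(m, v):
    """Solve m @ s = v for s with Cramer's rule."""
    d = det3(m)
    s = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]
        s.append(det3(mc) / d)
    return s


def rgb_to_xyz_matrix(primaries, white):
    """Linear RGB -> XYZ matrix for the given xy primaries and white point."""
    cols = [xy_to_xyz_column(x, y) for x, y in primaries]
    m = [[cols[c][r] for c in range(3)] for r in range(3)]  # primaries as columns
    s = solve3(m, xy_to_xyz_column(*white))  # scale so R = G = B = 1 gives white
    return [[m[r][c] * s[c] for c in range(3)] for r in range(3)]


REC709 = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
D65 = (0.3127, 0.3290)
M = rgb_to_xyz_matrix(REC709, D65)
print([round(v, 4) for v in M[1]])  # Y row: luminance contribution of R, G, B
```

For Rec. 709, the Y row comes out very close to the well-known luma weights 0.2126, 0.7152, and 0.0722. These weights sum to 1 because white has luminance Y = 1.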

These color properties can be visualized as follows:

Rec. 709 gamut

The region of the diagram bounded by the triangle formed by the primaries of a given display system is called its gamut.
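Deciding whether a chromaticity lies inside a display's gamut is then a point-in-triangle test on the xy diagram. Here is a minimal sketch using the signs of 2D cross products, one common technique among several. It checks chromaticity only and says nothing about luminance.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o) for 2D points."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_gamut(xy, primaries):
    """True if chromaticity xy lies inside (or on the edge of) the gamut triangle."""
    r, g, b = primaries
    d1, d2, d3 = cross(r, g, xy), cross(g, b, xy), cross(b, r, xy)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)  # inside when all signs agree


REC709 = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
print(in_gamut((0.3127, 0.3290), REC709))  # D65 white point
print(in_gamut((0.170, 0.797), REC709))    # a saturated green
```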

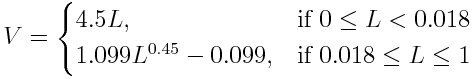

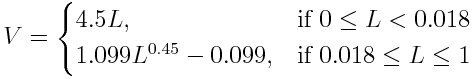

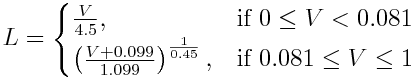

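We now turn to the part of the standard concerning brightness, and this is where it gets a little more complicated. The standard specifies the "overall opto-electronic transfer characteristics at source" as:

$$ V = \begin{cases} 4.500\,L, & 0 \le L < 0.018 \\ 1.099\,L^{0.45} - 0.099, & 0.018 \le L \le 1 \end{cases} $$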

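There are two problems:

- There is no specification of what physical luminance corresponds to L = 1.
- Although this is a broadcast video standard, it does not specify an EOTF.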

This happened for historical reasons: the display device, i.e. the consumer's TV, was considered to be the EOTF. In practice, this was handled by adjusting the captured luminance range in the OETF above so that the image looked good on a reference monitor with the following EOTF:

$$ L = V^{2.4} $$

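where L = 1 corresponds to a luminance of approximately 100 cd/m² (in this industry, the unit cd/m² is called a "nit"). The ITU confirms this in the latest versions of the standard with the following comment:

"In typical production practice, the encoding function of image sources is adjusted so that the final picture has the desired look, as seen on a reference monitor. The decoding function from Recommendation ITU-R BT.1886 is taken as the reference. The reference viewing environment is specified in Recommendation ITU-R BT.2035."

Rec. 1886 documents the characteristics of CRT monitors (the standard was published in 2011). In other words, it formalizes existing practice.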
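Chaining the two curves shows that the pairing is deliberately not an identity. The sketch below applies the Rec. 709 OETF and then the reference EOTF. The EOTF is simplified here to a pure 2.4 power with zero black level, which is an assumption of this sketch; the full BT.1886 formula also has black-level terms. The function names are illustrative.

```python
def rec709_oetf(L):
    """Rec. 709 OETF: scene-linear L in [0, 1] -> signal V."""
    if L < 0.018:
        return 4.5 * L
    return 1.099 * L ** 0.45 - 0.099


def reference_eotf(V, gamma=2.4):
    """Simplified reference display: L = V ** gamma, zero black level assumed."""
    return max(V, 0.0) ** gamma


# End to end, scene light -> display light: white maps to white,
# but mid-tones and shadows come out darker than in the scene.
for L in (0.01, 0.1, 0.5, 1.0):
    print(L, round(reference_eotf(rec709_oetf(L)), 4))
```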

The CRT graveyard

The nonlinearity of luminance as a function of applied voltage was a consequence of how CRT monitors are physically built. By coincidence, this nonlinearity is (very) roughly the inverse of the nonlinearity of human brightness perception. When we switched to digital signal representation, this had the welcome effect of spreading quantization error evenly across the whole brightness range.
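One way to see this even spreading of quantization error is to compare the relative luminance jump between neighboring 8-bit codes for two encodings: linear, and a pure 2.4 power curve standing in for the CRT response. This is a rough sketch; `relative_step` is a hypothetical helper.

```python
def relative_step(luminance, bits, gamma):
    """Relative luminance jump between adjacent codes near `luminance`.

    Assumes a pure power-law EOTF L = V ** gamma (gamma = 1.0 means linear).
    """
    levels = 2 ** bits - 1
    code = round(luminance ** (1.0 / gamma) * levels)
    code = min(max(code, 1), levels - 1)  # stay inside the code range
    lo = (code / levels) ** gamma
    hi = ((code + 1) / levels) ** gamma
    return (hi - lo) / lo


for lum in (0.01, 0.1, 0.5, 0.9):
    print(lum, f"linear {relative_step(lum, 8, 1.0):.2%}",
          f"gamma 2.4 {relative_step(lum, 8, 2.4):.2%}")
```

With linear encoding, the steps near black are huge (about a third of the value at 1% luminance) while bright steps are tiny. The power curve keeps the relative step much more uniform across the range.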

Rec. 709 is designed for 8-bit or 10-bit encoding, and most content uses 8 bits. For 8-bit encoding, the standard specifies that the luminance signal range must be mapped to codes 16-235.
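Mapping the signal onto codes 16-235 is a simple affine scaling. For 10 bits, the same formula scaled by 4 gives codes 64-940. Here is a minimal sketch; the function names are illustrative, and round-to-nearest is assumed.

```python
def quantize_narrow_range(v, bits=8):
    """Map a luma signal V in [0, 1] to Rec. 709 narrow-range integer codes."""
    scale = 2 ** (bits - 8)
    return round((219.0 * v + 16.0) * scale)


def dequantize_narrow_range(code, bits=8):
    """Inverse mapping; codes outside the nominal range give V outside [0, 1]."""
    scale = 2 ** (bits - 8)
    return (code / scale - 16.0) / 219.0


for bits in (8, 10):
    print(bits, quantize_narrow_range(0.0, bits), quantize_narrow_range(1.0, bits))
```

So an 8-bit Rec. 709 signal spends only 220 of its 256 codes on the nominal black-to-white range. That matters for the quantization comparisons later in this article.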

HDR10

There are two main rival HDR video formats: Dolby Vision and HDR10. In this article I will focus on HDR10, because it is an open standard that quickly became popular. It is the standard chosen for the Xbox One S and PS4.

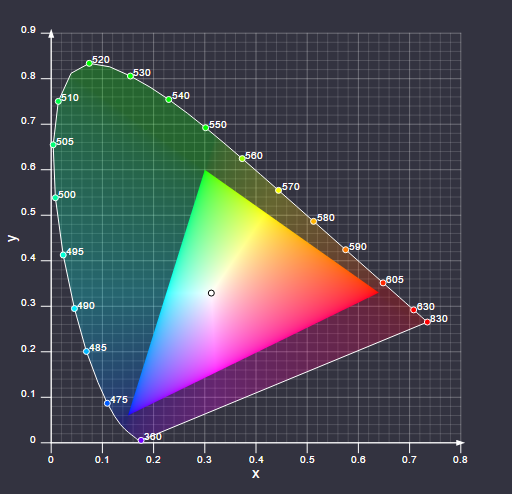

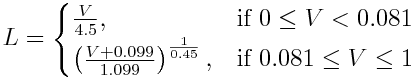

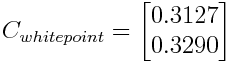

Again, we start with color. HDR10 uses the color space defined in Recommendation ITU-R BT.2020 (UHDTV), whose primaries have the following chromaticity coordinates:

- Red: x = 0.708, y = 0.292
- Green: x = 0.170, y = 0.797
- Blue: x = 0.131, y = 0.046

And again, the white point is D65. Plotted on the xy diagram, Rec. 2020 looks like this:

Rec. 2020 gamut

Clearly, the gamut of this color space is significantly larger than that of Rec. 709.
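One rough way to quantify "significantly larger" is to compare the areas of the two triangles on the xy diagram. The xy diagram is not perceptually uniform, so the area ratio is only an indication, not a measure of how many more colors viewers will see. Here is a short sketch using the shoelace formula:

```python
def triangle_area(points):
    """Area of a triangle from its three (x, y) vertices (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


REC709 = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
REC2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]

ratio = triangle_area(REC2020) / triangle_area(REC709)
print(f"Rec. 2020 / Rec. 709 xy area: {ratio:.2f}")
```

On the xy diagram, the Rec. 2020 triangle comes out at roughly 1.89 times the area of the Rec. 709 triangle.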

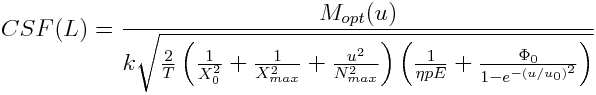

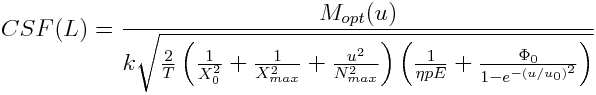

We now turn to the luminance part of the standard, and once again things get more interesting. In his 1999 PhD thesis, "Contrast sensitivity of the human eye and its effects on image quality", Peter Barten presented a slightly frightening equation:

(Many variables in this equation are themselves complex equations; for example, luminance is hidden inside the equations that compute E and M.)

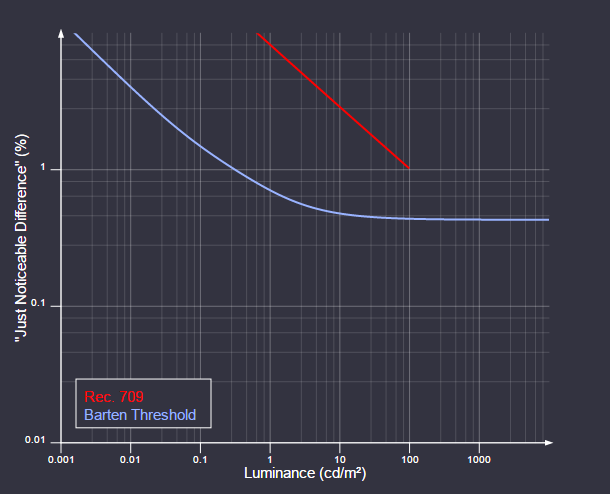

The equation describes how sensitive the eye is to changes in contrast at different luminance levels. Its various parameters describe the viewing conditions and some properties of the observer. The just noticeable difference (JND) is the inverse of Barten's equation. Therefore, for an EOTF quantization to be free of visible banding under those viewing conditions, the following must hold:

The Society of Motion Picture and Television Engineers (SMPTE) decided that Barten's equation would be a good basis for a new EOTF. The result is what we now call SMPTE ST 2084, or the Perceptual Quantizer (PQ).

PQ was created by choosing conservative values for the parameters of Barten's equation, i.e. for the typical viewing conditions expected of a consumer. PQ was then defined as the quantization that, for a given luminance range and number of code values, most closely follows Barten's equation with the chosen parameters.
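The resulting curve is published in SMPTE ST 2084 as a closed-form formula with five constants. Below is a Python sketch of the EOTF and its inverse, with the constants as given in the standard. The function names are my own.

```python
M1 = 2610 / 16384        # 0.1593017578125
M2 = 2523 / 4096 * 128   # 78.84375
C1 = 3424 / 4096         # 0.8359375
C2 = 2413 / 4096 * 32    # 18.8515625
C3 = 2392 / 4096 * 32    # 18.6875
PEAK_NITS = 10000.0


def pq_eotf(e):
    """PQ signal E in [0, 1] -> absolute luminance in cd/m^2 (nits)."""
    p = e ** (1.0 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)


def pq_inverse_eotf(nits):
    """Absolute luminance in nits -> PQ signal E in [0, 1]."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


for nits in (0.1, 1.0, 100.0, 1000.0, 10000.0):
    print(nits, round(pq_inverse_eotf(nits), 4))
```

Unlike the relative Rec. 709 curve, PQ maps signal values to absolute luminance. Roughly half of the signal range is spent below 100 nits.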

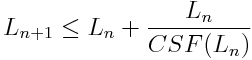

The quantized EOTF values can be found with the following recurrence, by finding k < 1 such that the last quantized value equals the required maximum luminance:

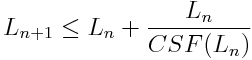

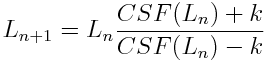

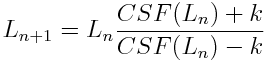

For a maximum luminance of 10,000 nits and 12-bit quantization (as used in Dolby Vision), the result looks like this:

PQ EOTF

As you can see, the quantization stays below the Barten threshold across the entire luminance range.

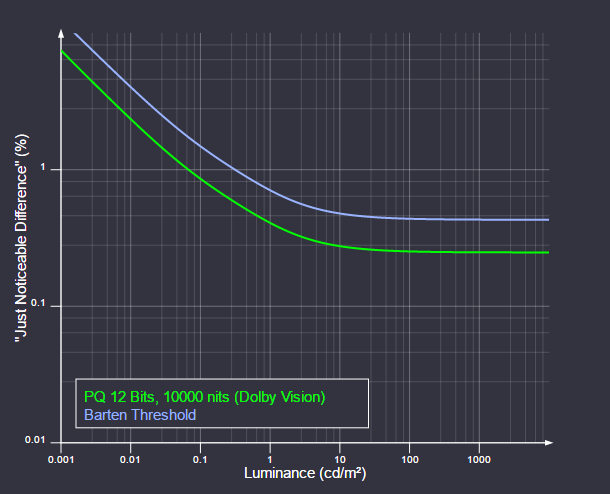

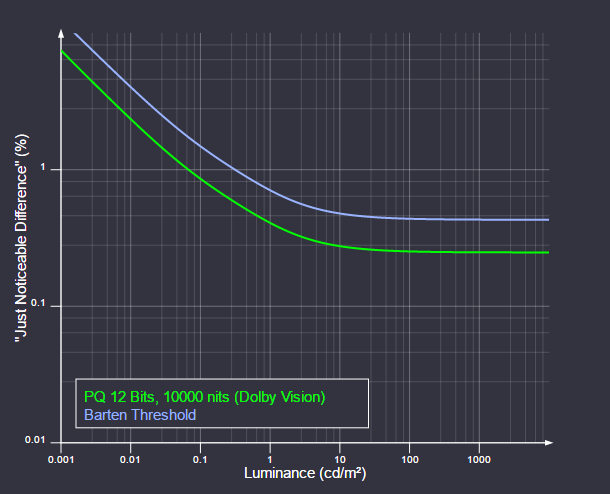

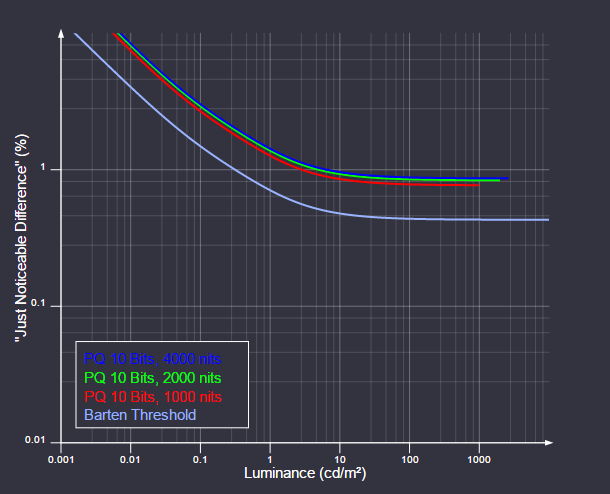

HDR10 also uses the PQ EOTF, but with 10-bit quantization. That is not enough to stay below the Barten threshold over a 10,000-nit luminance range. However, the standard allows metadata to be embedded in the signal to adjust the peak luminance. Here is what 10-bit PQ quantization looks like for different luminance ranges:

Different HDR10 EOTFs

But even then, the quantization steps are slightly above the Barten threshold. However, the situation is not as bad as the graph might suggest, because:

At the time of writing, HDR10 TVs on the market usually have a peak luminance of 1,000 to 1,500 nits, for which 10 bits are sufficient. Note also that TV manufacturers decide what to do with luminance beyond the range they can display. Some take a hard-clipping approach, others use a softer roll-off.
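Using the ST 2084 formulas, one can estimate how coarse the PQ steps are with 10 versus 12 bits. For simplicity, this sketch uses full-range code values. HDR10 signals usually use narrow-range codes, which makes the 10-bit steps slightly coarser still. The helper names are my own.

```python
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(e):
    """PQ signal E in [0, 1] -> luminance in nits (SMPTE ST 2084)."""
    p = e ** (1.0 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)


def pq_inverse_eotf(nits):
    """Luminance in nits -> PQ signal E in [0, 1]."""
    y = (nits / 10000.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def relative_step_at(nits, bits):
    """Relative luminance jump between the full-range code nearest `nits` and the next one."""
    levels = 2 ** bits - 1
    code = round(pq_inverse_eotf(nits) * levels)
    lo = pq_eotf(code / levels)
    hi = pq_eotf((code + 1) / levels)
    return (hi - lo) / lo


for nits in (0.1, 1.0, 100.0, 1000.0):
    print(nits, f"10-bit {relative_step_at(nits, 10):.3%}",
          f"12-bit {relative_step_at(nits, 12):.3%}")
```

Each extra bit halves the step size, so 12-bit steps are about four times finer than 10-bit steps at every luminance level.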

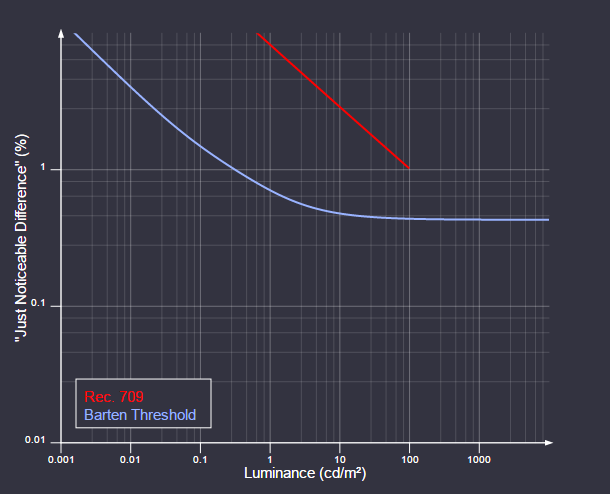

Here is what 8-bit Rec. 709 quantization looks like with a peak luminance of 100 nits:

Rec. 709 EOTF (16-235)

As you can see, we are far above the Barten threshold. Importantly, even the least demanding buyers set their TVs to a peak luminance well above 100 nits (usually 250-400 nits), which pushes the Rec. 709 curve even higher.


One of the biggest differences between Rec. 709 and HDR is that HDR specifies luminance in absolute values. In theory, this means that content made for HDR will look the same on all compatible TVs, at least up to their peak luminance.

There is a popular misconception that HDR content will be brighter overall, but in general this is not the case. HDR films are more likely to be mastered so that the average picture brightness is the same as for Rec. 709. The difference is that the brightest parts of the image are more vivid and detailed, so the mid-tones and shadows end up darker. Combined with HDR's absolute luminance values, this means HDR needs good viewing conditions. In bright light the pupil contracts, which makes details in dark areas of the image harder to discern.

Article based on information from habrahabr.ru

the

the Pioneers of studies of color vision

Today we know that the retina of the human eye contains three different types of photoreceptor cells called cones. Each of the three types of cones contain a protein from the protein family opsins, which absorb light in different parts of the spectrum:

the Absorption of light by opsins

Cones correspond to red, green and blue parts of the spectrum and are often referred to as long (L), medium (M) and short (S) according to the wavelengths to which they are most sensitive.

One of the first scientific papers on the interaction of light and the retina was the treatise "Theory Concerning Light and Colors" by Isaac Newton, written between 1670-1675. Newton had the theory that light with different wavelengths resulted in the resonance of the retina with the same frequency; these vibrations are then transmitted via the optic nerve to the "sensorium".

"the Rays of light, falling to the bottom of the eye excite vibrations in the retina, which propagate in the fibers of the optic nerves to the brain, creating the sense of sight. Different types of rays to create waves of different forces, which according to its power to excite sensations in different colors..."

(I recommend you read the scanned drafts of Newton to the web site of the University of Cambridge. Of course, I'm stating the obvious, but how he was a genius!)

More than a hundred years Thomas Jung came to the conclusion that as the resonance frequency is a property, depending on the system, to absorb light of all frequencies, in the retina there must be an infinite number of different resonant systems. Jung considered this unlikely, and reasoned that the number is limited to one system for red, yellow and blue. These colors were traditionally used in subtractive mixing of colors. In his own words:

As for the reasons stated by Newton, it is possible that the retinal motion is oscillatory rather than wave nature, frequency should depend on the structure of its substance. As it is almost impossible to believe that each sensitive point of the retina contains an infinite number of particles, each of which can fluctuate in perfect harmony with any kind of a wave, it becomes necessary to assume that the number is limited, for example, three primary colors: red, yellow, and blue...the Suggestion of Jung relative to the retina was wrong, but he made the correct conclusion: in the eye there is a finite number of types of cells.

In 1850 Hermann Helmholtz first got the experimental proof of the theory of Jung. Helmholtz asked the subject to compare the color of different samples of light sources, adjusting the brightness of several monochromatic light sources. He came to the conclusion that for comparison of all samples is necessary and sufficient three light sources: red, green, and blue part of the spectrum.

the

the Birth of modern colorimetry

Fast forward to the beginning of the 1930s. By the time the scientific community had a reasonably good idea about the inner workings of the eye. (Although it took another 20 years to George Wald was able to experimentally confirm the presence and function of rhodopsin in the cone cells of the retina. This discovery led him to the Nobel prize for medicine in 1967.) Commission Internationale de L'eclairage (international Commission on illumination), CIE, has set the task of creating a comprehensive quantitative assessment of color perception by person. Quantification was based on experimental data collected by William David Wright and John Guild in the parameters, similar to that selected for the first time, Hermann Helmholtz. The base settings were chosen 435,8 nm for blue, 546,1 nm for green and 700 nm for red.

Experimental setup John Gilda, three knobs adjust the primary colors

Due to the significant overlap of the sensitivity of M and L cones was impossible to correlate the selected wavelengths with blue-green portion of the spectrum. For "mapping" these colors as a starting point it was necessary to add a little basic red:

If we for a moment imagine that all the primary colors make a negative contribution, the equation can be rewritten as follows:

The result of the experiments was the table of the RGB triad for each wavelength that is visible as follows:

mapping of RGB colors in the CIE 1931

Of course, color with the negative red component cannot be displayed using the primary colors CIE.

Now we can find trichrome coefficients for the light distribution of the spectral intensity S as the following inner product:

It may seem obvious that the sensitivity to different wavelengths it is possible to integrate in this way, but it actually depends on the physical sensitivity of the eye, linear with respect to the sensitivity wavelengths. This was empirically confirmed in 1853 by Hermann Grassmann, and the above integrals in modern formulation, known as the law of Grassman.

The term "color space" arose because the primary colors (red, green, and blue) can be considered as a basis of a vector space. In this space the different colors perceived by the person represented by the rays emanating from the source. The modern definition of a vector space introduced in 1888 Giuseppe Peano, but more than 30 years before James Clerk Maxwell had already used only originated the theory that later became linear algebra for the formal description trichromatism color system.

CIE decided that to simplify the calculations it will be more convenient to work with color space in which the coefficients of the primary colors is always positive. Three new primary colors is expressed in the coordinates of the RGB color space as follows:

This new set of primary colors is impossible to implement in the physical world. It's just a mathematical tool that simplifies working with color space. In addition to the coefficients of the primary colors have always been positive, the new space is arranged in such a way that the coefficient of the color Y corresponds to the perceived brightness. This feature is known as brightness CIE (more about it can be read in the great article Color FAQ Charles Poynton (Charles between poynton)).

To simplify the visualization of the resulting color space, we perform the last conversion. Dividing each component by the amount of components we get a dimensionless quantity color, independent of its brightness:

The coordinates x and y known as chromaticity coordinates, and together with the CIE luminance Y they constitute the color space is CIE xyY. If we plot on a graph the chromaticity coordinates of all colors with a given luminance, we get the following diagram, which you will be familiar:

Diagram of CIE 1931 xyY

And the last thing you need to know — that white is considered a color of the color space. In this system, display white color is the x and y coordinates of the colors that are obtained when all the coefficients of the primary colors RGB are equal.

Over time, several new color spaces, which in various aspects has made improvements in the CIE 1931 space. Despite this, the CIE xyY system is the most popular color space that describes the properties of the display device.

the

Transfer function

Before considering the standards, it is necessary to introduce and explain two more concepts.

Opto-electronic transfer function

Opto-electronic transfer function (optical-electronic transfer function, OETF) determines how linear the light recorded by the device (camera) must be encoded in the signal, i.e. it is a function of the form:

Before V was an analog signal, but now, of course, it has digital encoding. Usually game developers are rarely faced with OETF. One example in which the function is important: the need to combine in-game video with computer graphics. In this case, you must know what OETF was recorded video, to restore the linear light and to properly blend it with computer imaging.

electro-optical transfer function

Electro-optical transfer function (electronic-optical transfer EOTF) OETF performs the opposite task, i.e. it determines how the signal will be converted into a linear light:

This feature is more important for game developers, because it defines how their content will display television screens and monitors users.

the Relationship between EOTF and OETF

The concept of the EOTF and OETF, though related, but serve different purposes. OETF are needed to represent the captured scene, from which we then can reconstruct the original linear lighting (this concept is the frame buffer HDR (High Dynamic Range) normal game). What happens at the stages of production of conventional film:

the

-

the

- data Capture scenes the

- invert the OETF to restore the values of the linear lighting the

- colour Correction the

- Mastering under various target formats (DCI-P3, Rec. 709, HDR10, Dolby Vision, etc.):

the-

the

- Reducing the dynamic range of the material to match the dynamic range of the target format (tone mapping) the

- Convert the color space of the target format the

- Invert EOTF for the material (when using the EOTF to the display device the image is restored as needed).

A detailed discussion of this process will not be included in our article, but I recommend to study detailed formal description of the workflow ACES (Academy Color Encoding System).

Until now the standard process of the game is as follows:

the

-

the

- Rendering the

- frame Buffer HDR the

- Tonal correction the

- Invert EOTF for the intended display device (usually sRGB) the

- colour Correction

In most game engines use a method of color correction, the popularized presentation of Neti Hoffman (Naty Hoffman) "Color Enhancement for Videogames" from Siggraph 2010. This method was practical when used only the target SDR (Standard Dynamic Range), and he was allowed to use color correction software already installed on the computers of most artists, such as Adobe Photoshop.

the Standard workflow of color correction SDR (image belongs to Jonathan blow (Jonathan Blow))

After the introduction of HDR most of the games began to move to the process similar to that used in film production. Even in the absence of HDR is similar to the cinematic process allowed us to optimize the performance. Color correction in HDR means that you have the whole dynamic range of the scene. In addition, be possible some effects that were previously unavailable.

Now we are ready to consider different standards currently used to describe the formats of televisions.

the

Standards

Rec. 709

Most of the standards related to the broadcasting of the video, released by the International telecommunication Union (International Telecommunication Union, ITU), a UN body, is mainly engaged in information technology.

recommendation ITU-R BT.709, more commonly called Rec. 709 is a standard that describes the properties of HDTV. The first version of the standard was released in 1990, the last in June 2015. The standard defines parameters such as aspect ratio, resolution, frame rate. These characteristics are familiar to most people, so I will not consider them and focus on the parts of the standard relating to color reproduction and brightness.

The standard describes in detail the color, the limited color space is CIE xyY. Red, green and blue light sources corresponding to the standard display should be chosen in such a way that they separate the chromaticity coordinates were as follows:

Their relative intensity should be set up so that white dot had a color

(This white point is also known as CIE Standard Illuminant D65 and the same seizure of the chromaticity coordinates of the spectral distribution of the intensity of normal sunlight.)

Properties of color can be visually represented as follows:

Coverage Rec. 709

The diagram area of color bounded by the triangle created by the main colors of a given display system, called reach.

We now turn to the part of the standard for the brightness, this is where it gets a little more complicated. The standard stated that "the Overall opto-electronic transfer characteristics at source" is:

There are two problems:

-

the

- there are No specifications about what corresponds to the physical luminance L = 1

Despite the fact that it is the standard for broadcast video, it is not specified EOTF

It happened historically because it was believed that the display device, i.e. TV consumer is EOTF. In practice, this was carried out by adjusting the range of brightness captured in the above OETF, so that the image looked good on the reference monitor with the following EOTF:

where L = 1 corresponds to the brightness of approximately 100 CD / m2 (unit CD / m2 in this industry called "nits"). This is confirmed by the ITU in the latest versions of the standard the following comment:

In a standard production practice, the function of encoding the image sources is adjusted in such a way that the final image had the required form, corresponding to appear on the reference monitor. The reference is accepted the decoding function from Recommendation ITU-R BT.1886. The reference viewing environment is specified in Recommendation ITU-R BT.2035.Rec. 1886 is the result of the documentation of the characteristics of CRT monitors (standard published in 2011), i.e., is a formalization of existing practices.

Cemetery

The nonlinearity of the brightness as a function of the applied voltage resulted in physically arranged as CRT monitors. By chance, this nonlinearity (very) approximately is inverted from the nonlinearity of brightness perception by a human. When we switched to digital representation of signals, this led to a good effect uniform distribution of the discretisation error over the entire range of brightness.

Rec. 709 is designed to use 8-bit or 10-bit encoding. In most of the content uses 8-bit encoding. For him, the standard specified that the distribution range of the brightness signal has to be distributed in codes 16-235.

the

HDR10

As for HDR-video, in it there are two main rival: Dolby Vision and HDR10. In this article I will focus on HDR10, because it is an open standard, which quickly became popular. This standard is chosen for the Xbox One S and PS4.

Once again we start with the chromaticity part of the color space. HDR10 uses the one defined in Recommendation ITU-R BT.2020 (UHDTV), which has the following primary chromaticity coordinates:

The white point is again D65. Plotted on the xy chromaticity diagram, Rec. 2020 looks like this:

Rec. 2020 coverage

The gamut of this color space is clearly much larger than that of Rec. 709.
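One rough way to quantify "much larger" is to compare the areas of the two gamut triangles in the xy diagram. The sketch below uses the published primaries of BT.709 and BT.2020 and the shoelace formula; the variable and function names are mine.

```python
# Chromaticity coordinates (x, y) of the R, G, B primaries from ITU-R BT.709 and BT.2020.
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
REC2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]


def triangle_area(points):
    """Area of a triangle given three (x, y) points (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


if __name__ == "__main__":
    a709 = triangle_area(REC709)
    a2020 = triangle_area(REC2020)
    print(f"Rec. 709 area:  {a709:.4f}")
    print(f"Rec. 2020 area: {a2020:.4f}")
    print(f"ratio:          {a2020 / a709:.2f}")
```

For these primaries the Rec. 2020 triangle covers roughly 1.9 times the xy area of Rec. 709. Keep in mind that the xy diagram is not perceptually uniform, so the area ratio is only a rough indicator of how much more color is available.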

Now we turn to the luminance part of the standard, and here, once again, things get more interesting. In his 1999 PhD thesis, "Contrast sensitivity of the human eye and its effects on image quality", Peter Barten presented a somewhat frightening equation:

(Many variables in this equation are themselves complex equations; for example, luminance is hidden inside the equations that compute E and M.)

The equation describes how sensitive the eye is to changes in contrast at different luminance levels, with various parameters describing the viewing conditions and some properties of the observer. The just noticeable difference (JND) is the inverse of Barten's equation, so for a discretized EOTF to stay free of visible steps independently of the viewing conditions, the following must hold:

The Society of Motion Picture and Television Engineers (SMPTE) decided that Barten's equation would be a good basis for a new EOTF. The result is what we now call SMPTE ST 2084, or the Perceptual Quantizer (PQ).
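The PQ curve is published in ST 2084 as a closed-form equation with rational constants. A minimal Python transcription of the EOTF and its inverse follows; the function names are my own.

```python
# SMPTE ST 2084 (PQ) constants, defined in the standard as exact rationals.
M1 = 2610 / 16384        # 0.1593017578125
M2 = 2523 / 4096 * 128   # 78.84375
C1 = 3424 / 4096         # 0.8359375
C2 = 2413 / 4096 * 32    # 18.8515625
C3 = 2392 / 4096 * 32    # 18.6875
PEAK = 10000.0           # cd/m2 represented by a signal value of 1.0


def pq_eotf(e: float) -> float:
    """Non-linear PQ signal e in [0, 1] -> absolute luminance in cd/m2."""
    p = e ** (1.0 / M2)
    return PEAK * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)


def pq_inverse_eotf(lum: float) -> float:
    """Absolute luminance in cd/m2 -> non-linear PQ signal in [0, 1]."""
    y = (max(lum, 0.0) / PEAK) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


if __name__ == "__main__":
    for nits in (0.1, 1.0, 100.0, 1000.0, 10000.0):
        print(f"{nits:>8} nits -> PQ signal {pq_inverse_eotf(nits):.4f}")
```

Note that 100 nits, the nominal peak of the Rec. 709 reference monitor, already sits at about half of the PQ signal range; the upper half is reserved for highlights.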

PQ was created by choosing conservative values for the parameters of Barten's equation, i.e. for the viewing conditions expected of a typical user. PQ was then defined as the quantization that, for a given luminance range and number of samples, most closely follows Barten's equation with the chosen parameters.

The discretized EOTF values can be found with the following recurrence, by finding a k < 1 such that the last sample equals the desired maximum luminance:

For a maximum luminance of 10,000 nits with 12-bit quantization (as used in Dolby Vision), the result looks like this:

PQ EOTF

As you can see, the sampling does not occupy the entire range of brightness.
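The search for k can be illustrated with the following sketch, which works under explicit assumptions and is not the exact PQ derivation. I assume each step's Michelson contrast, (L[j+1] - L[j]) / (L[j+1] + L[j]), is set to k times the threshold contrast 1/S(L[j]), and I replace Barten's model with a deliberately simple, hypothetical `toy_sensitivity` function. The starting luminance of 0.005 nits and all function names are likewise arbitrary choices. The code bisects for the k that makes the last sample land exactly on the maximum luminance; k < 1 means every step stays below the (toy) threshold.

```python
def toy_sensitivity(lum: float) -> float:
    """HYPOTHETICAL stand-in for Barten's contrast sensitivity S(L).

    It rises with luminance and saturates, the qualitative shape of the real
    model, but the numbers are made up for illustration.
    """
    return 20.0 + 480.0 * lum / (lum + 1.0)


def build_levels(k: float, n: int, l_min: float, sensitivity) -> list:
    """Generate n luminance levels where each step's Michelson contrast is k / S(L) (assumed form)."""
    levels = [l_min]
    for _ in range(n - 1):
        c = k / sensitivity(levels[-1])  # allowed contrast for this step
        levels.append(levels[-1] * (1.0 + c) / (1.0 - c))
    return levels


def find_k(n: int, l_min: float, l_max: float, sensitivity, k_hi: float = 10.0) -> float:
    """Bisect for k so that the n-th level equals l_max (the last level grows with k)."""
    lo, hi = 0.0, k_hi
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if build_levels(mid, n, l_min, sensitivity)[-1] < l_max:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for bits in (12, 10):
        k = find_k(2 ** bits, l_min=0.005, l_max=10000.0, sensitivity=toy_sensitivity)
        verdict = "below" if k < 1.0 else "above"
        print(f"{bits}-bit: k = {k:.3f} ({verdict} the toy threshold)")
```

With these made-up numbers, 12 bits give a k well below 1 while 10 bits need a k above 1, which mirrors the qualitative point made in the text; the actual values depend entirely on the real Barten model and its parameters.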

HDR10 also uses the PQ EOTF, but with 10-bit quantization. That is not enough to stay below Barten's threshold over a 10,000-nit luminance range, but the standard allows metadata describing the peak brightness to be embedded in the signal. Here is how 10-bit PQ quantization looks for different luminance ranges:

HDR10 EOTF for different luminance ranges

Even so, the curves still rise slightly above Barten's threshold. However, the situation is not as bad as the graph may suggest, because:

- the curve is logarithmic, so the relative error is actually not that large;
- don't forget that the parameters used to construct Barten's threshold were chosen conservatively.
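To put numbers on the relative-error argument, this sketch reuses the ST 2084 equations to compute the relative luminance jump between adjacent PQ codes at a few luminance levels, for 10-bit and 12-bit quantization. Using full-range codes (0 to 2^n - 1) rather than a narrow code range is a simplification, and the function names are mine.

```python
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(e: float) -> float:
    """PQ signal in [0, 1] -> luminance in cd/m2 (SMPTE ST 2084)."""
    p = e ** (1.0 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)


def pq_inverse_eotf(lum: float) -> float:
    """Luminance in cd/m2 -> PQ signal in [0, 1]."""
    y = (lum / 10000.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def pq_relative_step(lum: float, bits: int) -> float:
    """Relative jump between the full-range code nearest to `lum` and the next code up."""
    levels = 2 ** bits - 1
    code = min(max(round(pq_inverse_eotf(lum) * levels), 1), levels - 1)
    lo = pq_eotf(code / levels)
    hi = pq_eotf((code + 1) / levels)
    return (hi - lo) / lo


if __name__ == "__main__":
    for lum in (0.1, 1.0, 10.0, 100.0, 1000.0, 10000.0):
        print(f"{lum:>8} nits: 10-bit {pq_relative_step(lum, 10):6.2%}, "
              f"12-bit {pq_relative_step(lum, 12):6.2%}")
```

Above roughly 10 nits the relative step stays nearly constant, which is the practical consequence of the roughly logarithmic shape of PQ, and each extra pair of bits shrinks it by about a factor of four.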

At the time of writing, HDR10 TVs on the market typically have a peak brightness of 1,000 to 1,500 nits, for which 10 bits are sufficient. It should also be noted that TV manufacturers can decide what to do with luminance beyond the range they can display: some use hard clipping, others a softer roll-off.
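The difference between clipping and a softer roll-off can be illustrated with two hypothetical tone curves for a display with a 1,000-nit peak. Neither is taken from a real TV or standard: `hard_clip` simply saturates, while `soft_rolloff` passes luminance through unchanged up to an assumed knee (750 nits here) and then compresses everything above it exponentially, so that it approaches, but never exceeds, the display peak.

```python
import math

DISPLAY_PEAK = 1000.0  # assumed panel peak luminance, cd/m2
KNEE = 750.0           # hypothetical start of the roll-off, cd/m2


def hard_clip(lum: float, peak: float = DISPLAY_PEAK) -> float:
    """Show everything up to the panel peak exactly; anything brighter becomes the peak."""
    return min(lum, peak)


def soft_rolloff(lum: float, peak: float = DISPLAY_PEAK, knee: float = KNEE) -> float:
    """Identity below the knee; above it, an exponential shoulder that approaches `peak`.

    The shoulder has slope 1 at the knee, so the curve has no kink there.
    """
    if lum <= knee:
        return lum
    headroom = peak - knee
    return knee + headroom * (1.0 - math.exp(-(lum - knee) / headroom))


if __name__ == "__main__":
    for lum in (500.0, 900.0, 1000.0, 2000.0, 4000.0):
        print(f"{lum:6.0f} nits in -> clip {hard_clip(lum):6.1f}, roll-off {soft_rolloff(lum):6.1f}")
```

The trade-off is that the roll-off preserves some gradation in highlights above the panel's peak, at the cost of dimming values the panel could actually have shown (900 nits comes out at about 863 nits here).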

For comparison, here is what 8-bit Rec. 709 quantization looks like with a peak brightness of 100 nits:

EOTF Rec. 709 (16-235)

As you can see, we are far above Barten's threshold, and, importantly, even the least demanding buyers set their TVs to a peak brightness well above 100 nits (usually 250-400 nits), which raises the Rec. 709 curve even higher.
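Why does a brighter TV push the Rec. 709 curve further up? The code values stay the same, but they are stretched over a larger absolute luminance range, so any given absolute luminance lands on a lower code with a larger relative step. The sketch below assumes a zero-black BT.1886 display (L = peak · V^2.4) and 8-bit narrow-range codes; the helper names are mine.

```python
GAMMA = 2.4


def code_to_nits(code: int, peak: float) -> float:
    """8-bit narrow-range Rec. 709 code -> luminance on a zero-black BT.1886 display."""
    v = min(max((code - 16) / 219.0, 0.0), 1.0)
    return peak * v ** GAMMA


def relative_step_at(lum: float, peak: float) -> float:
    """Relative jump between the code nearest to `lum` nits and the next code, on a `peak`-nit display."""
    v = (lum / peak) ** (1.0 / GAMMA)
    code = min(max(round(219.0 * v + 16.0), 17), 234)  # stay inside 16-235 and off the black code
    lo = code_to_nits(code, peak)
    hi = code_to_nits(code + 1, peak)
    return (hi - lo) / lo


if __name__ == "__main__":
    for lum in (1.0, 10.0, 50.0):
        r100 = relative_step_at(lum, peak=100.0)
        r300 = relative_step_at(lum, peak=300.0)
        print(f"{lum:5.1f} nits: 100-nit display {r100:6.2%}, 300-nit display {r300:6.2%}")
```

At every luminance tested, the 300-nit setting produces a larger relative step than the 100-nit reference. Since the threshold depends on absolute luminance, the same signal ends up further above it.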


In conclusion

One of the biggest differences between Rec. 709 and HDR is that HDR specifies luminance in absolute values. In theory, this means that content mastered for HDR will look the same on all compatible TVs, at least up to their peak brightness.

There is a popular misconception that HDR content is generally brighter, but in general this is not the case. HDR films are more likely to be graded so that the average image brightness is the same as in Rec. 709, but with the brightest parts of the image more vivid and detailed, which means the mid-tones and shadows end up darker. Combined with HDR's absolute luminance values, this means HDR needs good viewing conditions to look its best: in bright surroundings the pupil contracts, making details in dark areas of the image harder to discern.
